CAPIO: Cross-Application Programmable I/O

High-performance computing (HPC) systems often face I/O bottlenecks, especially in data-intensive workflows. While distributed file systems offer parallel I/O capabilities, access patterns such as opening a large number of files or performing small random read/write operations can lead to slowdowns or even denial of service. Traditional workflows run simulation and post-processing as separate steps, whereas in-situ workflows integrate them, allowing data to be analyzed in real time.

Adopting in-situ workflows typically requires modifying existing applications to use new I/O APIs, which is challenging for workflows involving diverse technologies and teams. To simplify this, CAPIO was developed. CAPIO enables concurrent execution of workflow steps without modifying application code. It manages synchronization between steps and provides an I/O coordination language for defining data dependencies and synchronization semantics.

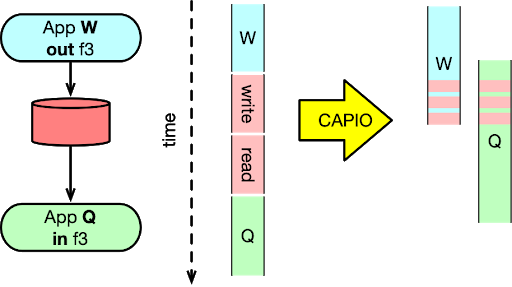

Using CAPIO, workflows achieve streaming execution with the same results as traditional batch execution, facilitating efficient and seamless inter-step communication.

Key Features of CAPIO

Below are the main features of CAPIO, its objectives, and how it achieves them:

I/O Coordination Language and Runtime: CAPIO comprises an I/O coordination language and a runtime that implements it.

Enhanced I/O Performance: CAPIO transforms traditional workflows, which rely on file-based communication, into streaming workflows where all steps are executed concurrently.

No Code Modifications Required: CAPIO eliminates the need for modifications to the applications within the workflow.

Configuration-Based Execution: Users provide the CAPIO runtime with a configuration file written in the CAPIO language, which defines file synchronization semantics for streaming communication.

Concurrent File Access: The CAPIO language specifies the synchronization required for workflow steps to read and write files concurrently, even if originally programmed for sequential execution.
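To make the idea of streaming communication concrete, the following is a minimal conceptual sketch and not CAPIO code. It models a file shared by a producer step and a consumer step, where the consumer reads data as soon as it is written and treats the writer closing the file as end-of-file. The class `StreamedFile`, the function `run_pipeline`, and the close-means-complete rule are all assumptions made for illustration; the actual synchronization semantics are whatever the CAPIO configuration file specifies.

```python
import threading


class StreamedFile:
    """In-memory stand-in (hypothetical) for a file shared by two workflow steps."""

    def __init__(self):
        self._chunks = []
        self._closed = False
        self._cond = threading.Condition()

    def write(self, chunk):
        # Append data and wake up any reader waiting for it.
        with self._cond:
            self._chunks.append(chunk)
            self._cond.notify_all()

    def close(self):
        # Assumption for this sketch: closing marks the file as complete.
        with self._cond:
            self._closed = True
            self._cond.notify_all()

    def read_chunks(self):
        """Yield chunks as they arrive; stop once the writer has closed the file."""
        index = 0
        while True:
            with self._cond:
                # Block until unread data exists or the writer is done.
                self._cond.wait_for(
                    lambda: index < len(self._chunks) or self._closed
                )
                if index < len(self._chunks):
                    chunk = self._chunks[index]
                    index += 1
                else:
                    return
            yield chunk


def run_pipeline(values):
    """Run a producer and a consumer concurrently over one shared 'file'."""
    shared = StreamedFile()
    results = []

    def producer():
        for value in values:
            shared.write(value)
        shared.close()

    def consumer():
        # The consumer is written as a plain sequential read loop.
        for value in shared.read_chunks():
            results.append(value * 2)

    threads = [threading.Thread(target=consumer), threading.Thread(target=producer)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results
```

The consumer loop looks the same as it would in a batch workflow that reads a finished file; only the blocking behavior behind `read_chunks` changes, which mirrors how a step written for sequential execution can take part in a streaming workflow.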

The CAPIO Runtime

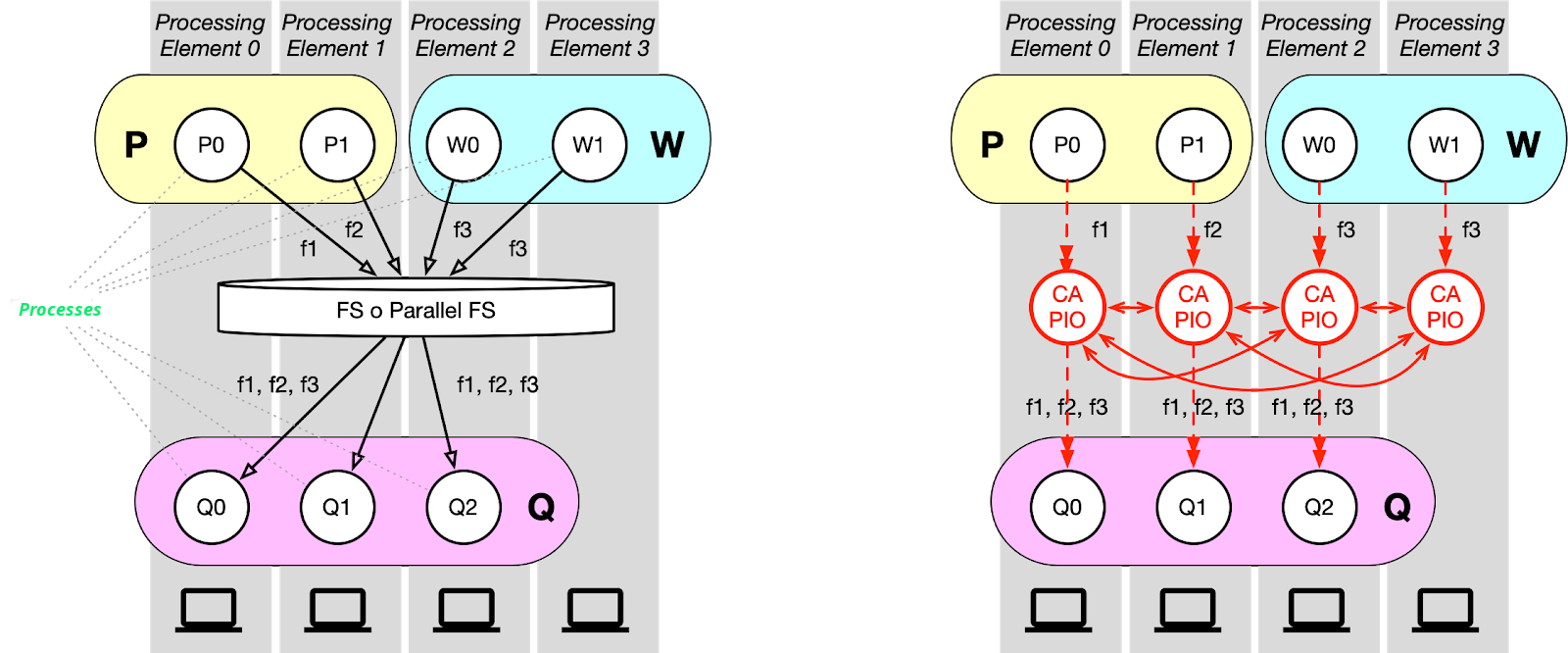

The CAPIO runtime consists of a collection of user-space servers per node, which implement an ad hoc distributed data storage system for a specified workflow application. Utilizing information from the input configuration file, CAPIO enforces the streaming movement of data files between different workflow steps.

The software architecture of CAPIO is depicted in the figure above. CAPIO is implemented in modern C++ and uses MPI for inter-node communication. CAPIO server processes are currently deployed on the nodes of a cluster using the mpirun launcher. Users define a CAPIO local-node mount point (capio_mnt) for each cluster node through the CAPIO_DIR environment variable. CAPIO captures all I/O system calls executed on files and directories created within the capio_mnt directory.

System calls directed at files outside the CAPIO local mount point are ignored by CAPIO and forwarded to the kernel. The CAPIO implementation supports both multi-process and multi-threaded applications, so I/O calls to the same or different files can be issued by different processes or threads of the same application.
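The routing rule described above can be sketched as a simple path check. This is an illustration only, not the CAPIO implementation (which is written in C++): the function names `is_under_mount` and `route_call` are hypothetical, and the sketch assumes POSIX-style absolute paths and does not resolve symbolic links.

```python
import os


def is_under_mount(path, capio_dir):
    """Return True if `path` lies inside the directory `capio_dir`."""
    mount = os.path.abspath(capio_dir)
    target = os.path.abspath(path)  # also collapses "." and ".." components
    # Comparing whole path components avoids treating "/a/mnt_other"
    # as if it were inside "/a/mnt".
    return os.path.commonpath([mount, target]) == mount


def route_call(path, capio_dir):
    """Decide who handles an I/O call on `path` (hypothetical labels)."""
    return "capio" if is_under_mount(path, capio_dir) else "kernel"
```

For example, with `capio_dir` set to `/tmp/capio_mnt`, a call on `/tmp/capio_mnt/out/a.dat` would be routed to CAPIO, while a call on `/tmp/capio_mnt_other/a.dat` would be forwarded to the kernel.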

The CAPIO intercept library, implemented using the system call intercepting library syscall_intercept, functions as a shared library dynamically linked to the workflow steps. This linking occurs through the LD_PRELOAD dynamic linker environment variable, enabling the library to capture I/O system calls executed on files and directories within the CAPIO local-node mount point. By capturing these system calls, the CAPIO runtime enforces synchronization semantics, transforming a batch workflow into a streaming workflow without requiring any modifications to the code.
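As a rough sketch of how a workflow step might be launched with the intercept library preloaded, the helper below builds the environment for a child process. The function name `capio_step_env` and the library path shown in the usage note are placeholders, not names taken from the CAPIO distribution; only the `LD_PRELOAD` and `CAPIO_DIR` variables come from the description above.

```python
import os


def capio_step_env(capio_dir, intercept_lib, base_env=None):
    """Build an environment that preloads the intercept library for one step.

    `capio_dir` is the local-node mount point and `intercept_lib` is the
    path to the shared library; both are supplied by the caller.
    """
    env = dict(os.environ if base_env is None else base_env)
    env["CAPIO_DIR"] = capio_dir
    # Keep any library that is already preloaded; entries are colon-separated.
    existing = env.get("LD_PRELOAD", "")
    env["LD_PRELOAD"] = f"{intercept_lib}:{existing}" if existing else intercept_lib
    return env
```

A step could then be started with, for instance, `subprocess.run(["./simulation"], env=capio_step_env("/tmp/capio_mnt", "/path/to/intercept_library.so"))`, where both the executable and the library path are hypothetical. The application binary itself is unchanged; only its launch environment differs.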

For more details about the CAPIO runtime, and about how to write a configuration file that enables streaming communication in a workflow without modifying the code, see the paper “CAPIO: a Middleware for Transparent I/O Streaming in Data-Intensive Workflows” or my PhD thesis.

CAPIO is currently maintained and developed by the Parallel Computing Research Group of the University of Turin in collaboration with the University of Pisa. If you want to keep up with the latest news, you can check out the website or the CAPIO GitHub repository.